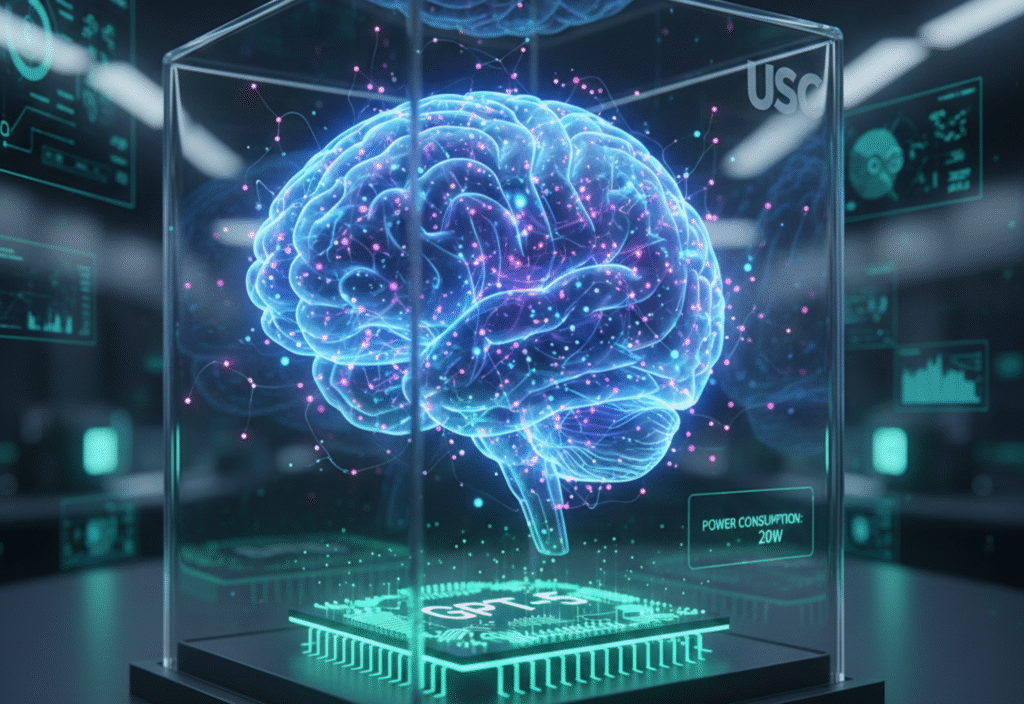

Researchers at the University of Southern California (USC) have developed brain-inspired artificial neurons that dramatically reduce energy consumption while preserving computational capability. If the technology scales, future models comparable to GPT-5 could run on as little as 20 watts of power, which would open the door to powerful AI on laptops, edge devices, and even mobile hardware. That prospect challenges the long-standing assumption that more intelligent AI must consume more power.

This article explores how USC’s artificial neurons work, why they matter, and what they could mean for the future of large language models, AI infrastructure, and global energy consumption.

The Energy Crisis of Modern AI

Modern AI systems are incredibly powerful—but also incredibly expensive to run.

Training and operating state-of-the-art LLMs requires:

Massive GPU or TPU clusters

Continuous high-bandwidth memory access

Energy-intensive matrix computations

Dedicated cooling infrastructure

Even inference, the act of simply using a trained model, can draw hundreds of watts per accelerator, and a multi-GPU server can draw several kilowatts. As models approach trillions of parameters, energy efficiency has become one of the biggest bottlenecks in AI progress.

This creates three major problems:

Rising costs that limit AI access to large corporations

Environmental impact due to massive electricity usage

Deployment limits, preventing advanced AI from running on local or edge devices

USC’s artificial neuron research directly targets these challenges.

What Are USC’s Artificial Neurons?

Unlike traditional digital neural networks that rely on software running on power-hungry hardware, USC’s approach focuses on neuromorphic engineering—hardware that mimics how biological neurons process information.

Key characteristics of these artificial neurons include:

Analog computation, rather than purely digital operations

Event-driven processing, meaning energy is only used when signals are active

Brain-like signal propagation, similar to synapses and spikes

Minimal data movement, reducing one of the biggest sources of power loss

In the human brain, approximately 86 billion neurons operate together on roughly 20 watts of power. USC’s work attempts to replicate this efficiency at the hardware level, not just through better algorithms.
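The event-driven behavior listed above can be illustrated with a leaky integrate-and-fire (LIF) neuron, a standard textbook model of spiking. This is a software sketch only and does not describe USC’s device; the function name `simulate_lif` and the `leak` and `threshold` values are assumptions chosen for illustration.

```python
def simulate_lif(inputs, leak=0.9, threshold=1.0):
    """Simulate a leaky integrate-and-fire neuron over discrete time steps.

    inputs: sequence of input currents, one per step (hypothetical units).
    leak, threshold: illustrative values, not taken from any real device.
    Returns a list of 0/1 spike flags, one per step.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        # The membrane potential decays ("leaks") and integrates the new input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)   # threshold crossed: emit a spike (an "event")
            potential = 0.0    # reset after firing
        else:
            spikes.append(0)   # below threshold: the neuron stays silent
    return spikes


if __name__ == "__main__":
    print(simulate_lif([0.0] * 5))                   # no input, no spikes
    print(simulate_lif([0.6, 0.6, 0.0, 0.6, 0.6]))   # spikes only when charge accumulates
```

With no input the neuron never fires, which is the sense in which an event-driven element spends energy only when signals are active.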

Why Traditional AI Hardware Is So Inefficient

Most current AI hardware follows the von Neumann architecture, in which memory and computation are physically separated. Data must be shuttled constantly between the two, and that movement can cost far more energy than the arithmetic itself.

Problems with conventional AI hardware include:

Heavy reliance on floating-point operations

Continuous clock cycles, even when no meaningful computation occurs

Redundant calculations during inference

Poor scalability for real-time intelligence

Artificial neurons aim to sidestep many of these limitations by combining memory and computation in the same physical element, much as biological neurons do.
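A rough way to see why separating memory from computation is costly is to split the energy bill into a compute term and a data-movement term. The sketch below is a toy model, not a measurement: the function names and the per-operation and per-byte energy figures are placeholders chosen only to show the shape of the argument.

```python
def energy_breakdown(num_ops, bytes_per_op, joules_per_op, joules_per_byte):
    """Split total energy into (compute, data_movement), both in joules."""
    compute = num_ops * joules_per_op
    movement = num_ops * bytes_per_op * joules_per_byte
    return compute, movement


def movement_share(compute, movement):
    """Fraction of total energy spent moving data rather than computing."""
    total = compute + movement
    return movement / total if total else 0.0


if __name__ == "__main__":
    # PLACEHOLDER values for illustration only; real figures depend on the
    # process node, the memory technology, and the distance data travels.
    JOULES_PER_OP = 1e-12
    JOULES_PER_BYTE = 100e-12

    # Separated memory: every operation fetches 4 bytes from distant memory.
    separated = energy_breakdown(1_000_000, 4, JOULES_PER_OP, JOULES_PER_BYTE)
    # Co-located memory: operands already sit where the computation happens.
    colocated = energy_breakdown(1_000_000, 0, JOULES_PER_OP, JOULES_PER_BYTE)

    print("separated:", separated, "movement share:", movement_share(*separated))
    print("co-located:", colocated, "movement share:", movement_share(*colocated))
```

Under these assumed numbers, nearly all of the energy in the separated case goes to moving data, which is the cost that combining memory and computation is meant to remove.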

How Artificial Neurons Could Enable GPT-5 at 20 Watts

The idea of running a GPT-5-level model on 20 watts doesn’t mean today’s GPT-5 architecture would magically shrink overnight. Instead, it suggests a new generation of AI systems built from the ground up on neuromorphic principles.

Here’s how USC’s artificial neurons could make this possible:

1. Massive Reduction in Power per Operation

Artificial neurons compute with physical quantities such as voltage changes rather than energy-intensive digital arithmetic, which can sharply reduce the power used per inference step.

2. Sparse and Event-Driven Activation

Unlike dense LLMs, which use billions of parameters for every token, neuromorphic systems activate only the neurons that receive signals, reducing wasted computation.
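The saving from sparse, event-driven activation can be sketched by counting multiply-accumulate (MAC) operations. The two functions below are hypothetical illustrations, not part of any neuromorphic toolkit: one visits every weight, while the other does work only for inputs that are non-zero (that is, inputs that "fired").

```python
def dense_layer(weights, inputs):
    """weights[j][i] connects input i to output j. Returns (outputs, mac_count)."""
    macs = 0
    outputs = []
    for row in weights:
        total = 0.0
        for w, x in zip(row, inputs):
            total += w * x   # performed even when x is zero
            macs += 1
        outputs.append(total)
    return outputs, macs


def event_driven_layer(weights, inputs):
    """Same result as dense_layer, but only active (non-zero) inputs trigger work."""
    active = [(i, x) for i, x in enumerate(inputs) if x != 0]
    macs = 0
    outputs = [0.0] * len(weights)
    for i, x in active:
        for j, row in enumerate(weights):
            outputs[j] += row[i] * x
            macs += 1
    return outputs, macs


if __name__ == "__main__":
    weights = [[1, 2, 3, 4], [5, 6, 7, 8]]
    inputs = [0, 1, 0, 0]   # only one of four inputs is active
    print(dense_layer(weights, inputs))
    print(event_driven_layer(weights, inputs))
```

Both functions return the same outputs; the event-driven version simply does less work when most inputs are silent, and the gap widens as activity becomes sparser.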

3. On-Chip Learning and Memory

By storing weights and logic together, artificial neurons minimize memory access, one of the biggest energy drains in modern AI hardware.
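One common way to picture weights and computation living in the same place is a resistive crossbar, in which each stored conductance multiplies an applied voltage (Ohm's law) and the currents on a shared wire add up (Kirchhoff's current law). The sketch below simulates that idea numerically. It is a generic illustration of analog in-memory computing, assumed here for explanation, and is not a description of USC’s circuit; `crossbar_currents` is a hypothetical name.

```python
def crossbar_currents(conductances, voltages):
    """Simulate an ideal resistive crossbar.

    conductances[i][j]: conductance (siemens) of the cell at row i, column j.
                        These play the role of stored weights.
    voltages[i]:        voltage (volts) applied to row i (the input signal).
    Returns the current (amperes) collected on each column.
    """
    num_cols = len(conductances[0])
    currents = [0.0] * num_cols
    for g_row, v in zip(conductances, voltages):
        for j, g in enumerate(g_row):
            # Ohm's law per cell (I = G * V); column currents sum on the wire.
            currents[j] += g * v
    return currents


if __name__ == "__main__":
    stored_weights = [[1.0, 2.0],
                      [3.0, 4.0]]
    inputs = [1.0, 0.5]
    print(crossbar_currents(stored_weights, inputs))
```

In a physical crossbar the loop does not exist: the multiply and the sum happen in the devices and wires themselves, so the weights never have to be fetched from a separate memory. A real device would also face noise, drift, and limited precision, which this idealized model ignores.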

4. Parallelism Without Heat Explosion

Because each unit dissipates so little power, brain-inspired systems could in principle scale out without the thermal penalties seen in dense GPU clusters.

When combined, these factors make it plausible for future AI models with GPT-5-level reasoning capabilities to operate within mobile-class power budgets.
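To get a feel for what a 20-watt budget implies, the arithmetic can be written out directly: power divided by throughput gives the energy available per token, and dividing again by the operations per token gives the energy available per operation. The throughput and operation counts below are hypothetical inputs, not figures for GPT-5 or for USC’s hardware.

```python
def joules_per_token(power_watts, tokens_per_second):
    """Energy available for each generated token under a fixed power budget."""
    return power_watts / tokens_per_second


def max_joules_per_op(power_watts, tokens_per_second, ops_per_token):
    """Largest energy each operation may use while staying within the budget."""
    return joules_per_token(power_watts, tokens_per_second) / ops_per_token


if __name__ == "__main__":
    POWER_WATTS = 20.0          # the budget discussed in this article
    TOKENS_PER_SECOND = 10.0    # hypothetical target throughput
    OPS_PER_TOKEN = 1e12        # hypothetical workload size, for illustration

    print("J per token:", joules_per_token(POWER_WATTS, TOKENS_PER_SECOND))
    print("J per op:", max_joules_per_op(POWER_WATTS, TOKENS_PER_SECOND, OPS_PER_TOKEN))
```

Under these assumed inputs each token gets 2 joules and each operation about 2 picojoules. The budget can therefore be met either by making every operation cheaper or by performing fewer operations per token, which is where the factors listed above come in.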

What This Means for the Future of AI

If USC’s artificial neuron technology matures and scales, the implications are enormous.

AI Without Data Centers

Advanced AI could run locally on:

Laptops

Smartphones

Autonomous robots

Smart home devices

Medical equipment

This would reduce dependence on cloud infrastructure and improve privacy.

Edge AI Becomes Truly Intelligent

Instead of sending data to the cloud, devices could reason, plan, and adapt locally in real time.

Sustainable AI Development

Energy-efficient AI could dramatically cut carbon emissions associated with training and inference, making large-scale AI environmentally viable.

Democratization of AI

Powerful models would no longer be limited to companies with billion-dollar infrastructure budgets.

Challenges That Still Remain

While the promise is enormous, several hurdles must be overcome:

Scalability: Moving from lab-scale neurons to billions of artificial units

Software compatibility: Rewriting AI frameworks for neuromorphic hardware

Manufacturing complexity: Producing reliable neuromorphic chips at scale

Model redesign: LLMs must be re-architected, not just ported

USC’s work represents a foundation—not a finished product.

A Shift Bigger Than GPUs

The AI industry has spent the last decade optimizing GPUs and accelerators. USC’s artificial neurons suggest something more radical: a complete architectural shift away from brute-force computation toward brain-inspired efficiency.

Just as GPUs once replaced CPUs for deep learning, neuromorphic systems may eventually replace GPUs for reasoning-heavy AI models.

Conclusion

USC’s artificial neuron research points toward a future where GPT-5-level intelligence no longer requires massive power consumption. By mimicking the efficiency of the human brain, these neurons could enable advanced AI systems to run on as little as 20 watts, transforming how and where AI is deployed.