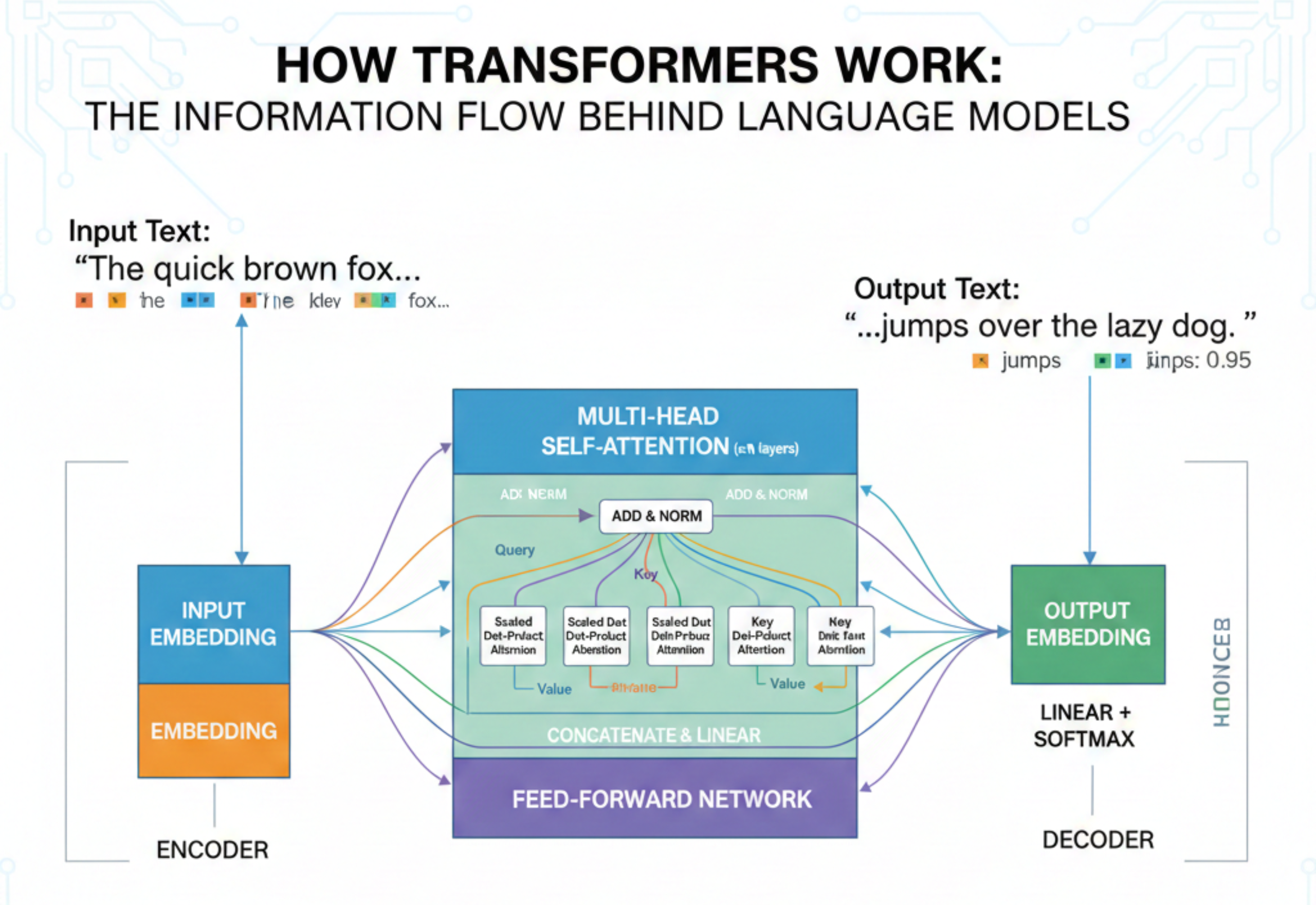

Information flow in Transformers is central to modern natural language processing (NLP). It underlies large language models such as GPT and BERT, which enable machine translation, text summarization, and question answering. Understanding this flow explains how Transformers “think” and generate human-like text efficiently.

Understanding Transformers

Transformers are a neural network architecture introduced by Vaswani et al. in 2017 in the paper “Attention Is All You Need.” Unlike recurrent neural networks (RNNs), which read a sequence one token at a time, and convolutional networks, which see only a local window of tokens in each layer, Transformers process all tokens of an input sequence in parallel and let every token attend to every other token. As a result, they train efficiently on parallel hardware and capture long-range dependencies in text.

The core components of a Transformer include:

Input Embeddings: Convert words or tokens into numerical vectors.

Positional Encodings: Add sequence information to tokens.

Self-Attention Mechanism: Determines which parts of the input sequence are important relative to each other.

Feed-Forward Neural Networks: Process information extracted by attention mechanisms.

Layer Normalization & Residual Connections: Stabilize training and improve information flow.

Output Layer: Produces predictions, such as the next word or classification label.
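To make the feed-forward, residual, and layer-normalization components from the list above more concrete, here is a minimal NumPy sketch. It assumes the "post-norm" arrangement (normalize after adding the residual), a ReLU activation, and layer normalization without learned scale and shift; the function names, sizes, and random weights are illustrative stand-ins, not values from any trained model.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    """Normalize each token vector to zero mean and unit variance (no learned scale/shift)."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def feed_forward(x, w1, b1, w2, b2):
    """Position-wise feed-forward network: two linear maps with a ReLU in between."""
    return np.maximum(0.0, x @ w1 + b1) @ w2 + b2

def add_and_norm(x, sublayer_out):
    """Residual connection followed by layer normalization (post-norm, an assumption here)."""
    return layer_norm(x + sublayer_out)

# Illustrative sizes and random weights (assumptions for this sketch).
rng = np.random.default_rng(0)
seq_len, d_model, d_ff = 4, 8, 32
x = rng.normal(size=(seq_len, d_model))
w1 = rng.normal(size=(d_model, d_ff)) * 0.1
b1 = np.zeros(d_ff)
w2 = rng.normal(size=(d_ff, d_model)) * 0.1
b2 = np.zeros(d_model)

y = add_and_norm(x, feed_forward(x, w1, b1, w2, b2))
print(y.shape)  # (4, 8)
```

The residual term `x + sublayer_out` gives each token a direct path past the sublayer, which is one reason these connections help information flow through deep stacks.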

The Information Flow in Transformers

The key to understanding Transformers lies in how information moves from input to output. Let’s break it down step by step:

1. Input Representation

Every word or token in a sequence is first converted into a vector using embeddings. Additionally, positional encodings are added so the model knows the order of the words. This combination forms the input representation for the model.
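As a rough illustration of this step, the sketch below looks up token embeddings and adds positional encodings. It assumes the fixed sinusoidal encodings from the original Transformer paper (many models use learned position embeddings instead); the toy vocabulary, the dimension `d_model = 8`, and the random embedding table are hypothetical stand-ins for learned parameters.

```python
import numpy as np

def sinusoidal_positions(seq_len, d_model):
    """Fixed sinusoidal positional encodings, shape (seq_len, d_model); d_model must be even."""
    positions = np.arange(seq_len)[:, None]      # (seq_len, 1)
    dims = np.arange(0, d_model, 2)[None, :]     # (1, d_model / 2), the even indices 2i
    angles = positions / np.power(10000.0, dims / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)                 # even dimensions use sine
    pe[:, 1::2] = np.cos(angles)                 # odd dimensions use cosine
    return pe

# Hypothetical toy vocabulary and a random embedding table (stand-in for learned weights).
vocab = {"the": 0, "cat": 1, "sat": 2}
d_model = 8
rng = np.random.default_rng(0)
embedding_table = rng.normal(size=(len(vocab), d_model))

token_ids = np.array([vocab[w] for w in ["the", "cat", "sat"]])
token_vectors = embedding_table[token_ids]       # (3, d_model)
inputs = token_vectors + sinusoidal_positions(len(token_ids), d_model)
print(inputs.shape)  # (3, 8)
```

Because the encoding is added rather than concatenated, the input keeps the same dimension as the embeddings, and the same word at two different positions yields two different input vectors.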

2. Self-Attention Mechanism

The self-attention mechanism allows each token to consider every other token in the sequence, determining which words are most relevant.

Mathematically, self-attention computes three vectors for each token:

Query (Q)

Key (K)

Value (V)

The attention output is then computed as:

$$\text{Attention}(Q, K, V) = \text{Softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V$$

Here $d_k$ is the dimension of the key vectors. This operation produces a weighted sum of the values, emphasizing the most relevant tokens.

Importantly, self-attention allows the model to incorporate contextual information from the entire sequence, rather than just nearby words. Therefore, Transformers excel at capturing long-range dependencies.
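The attention formula in this section can be sketched in a few lines of NumPy. This is a minimal single-head version without masking or batching; the projection matrices `w_q`, `w_k`, and `w_v` are random placeholders for learned weights, and the sizes are arbitrary.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v):
    """Return Softmax(Q K^T / sqrt(d_k)) V together with the attention weights."""
    d_k = k.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)      # (seq_len, seq_len): every token against every token
    weights = softmax(scores, axis=-1)   # each row sums to 1
    return weights @ v, weights

# Illustrative sizes and random projections (assumptions for this sketch).
rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8
x = rng.normal(size=(seq_len, d_model))  # one input vector per token
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

output, weights = scaled_dot_product_attention(x @ w_q, x @ w_k, x @ w_v)
print(output.shape, weights.shape)  # (4, 8) (4, 4)
```

Row `i` of `weights` shows how much token `i` draws from every other token, which is where the sequence-wide context comes from.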

3. Multi-Head Attention

To capture multiple types of relationships simultaneously, Transformers use multi-head attention. Each “head” learns different attention patterns. Then, the outputs of all heads are concatenated and passed through a linear layer, maintaining a rich flow of information.
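A compact way to see the split, attend, concatenate, and project steps is the NumPy sketch below. It assumes the common design in which `d_model` is divided evenly across heads and a single output matrix `w_o` mixes the concatenated heads; all weights are random placeholders and the helper names are illustrative.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """Self-attention with num_heads heads; d_model must be divisible by num_heads."""
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split_heads(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (num_heads, seq_len, seq_len)
    weights = softmax(scores, axis=-1)                   # one attention pattern per head
    heads = weights @ v                                  # (num_heads, seq_len, d_head)
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)  # concatenate the heads
    return concat @ w_o                                  # final linear layer

# Illustrative sizes and random weights (assumptions for this sketch).
rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 4, 8, 2
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) * 0.1 for _ in range(4))

mha_out = multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(mha_out.shape)  # (4, 8)
```

Each head works in a smaller subspace of size `d_head`, so the total cost stays close to that of a single full-width head while allowing several distinct attention patterns.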
