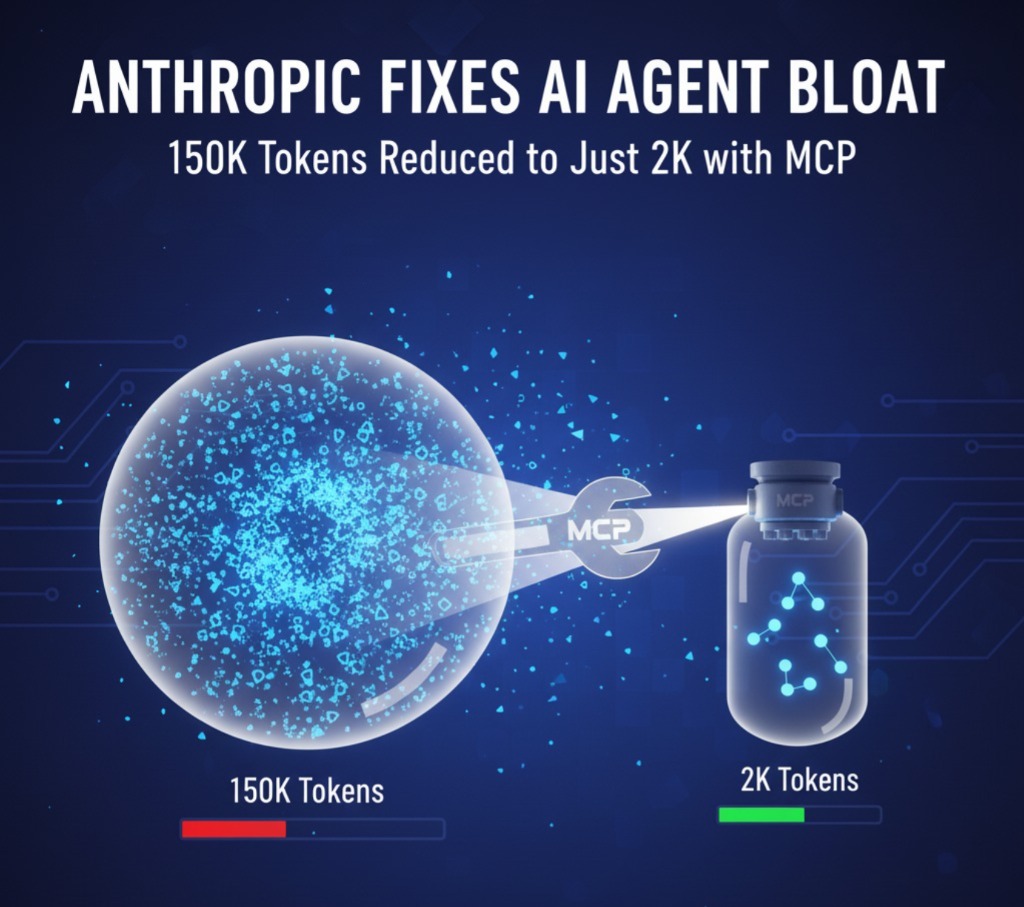

Token bloat has been a major challenge for developers building AI agents: excess tokens slow processing, raise costs, and make task execution inefficient. Anthropic’s Model Context Protocol (MCP), combined with code execution, cuts token usage dramatically, from 150,000 to just 2,000 tokens in one of Anthropic’s examples, making agents faster, cheaper, and smarter.

What Is AI Agent Bloat?

AI agents work within a context window measured in tokens. Instructions, memory, tool definitions, and intermediate results all consume tokens. Over time the context fills with unnecessary information, causing:

Slower processing

Higher compute costs

Repeated or irrelevant outputs

Inefficient task execution

Context overload for the model

Even a simple task can take minutes when the model has to process this much unnecessary data.

What Is MCP (Model Context Protocol)?

MCP is an open protocol, introduced by Anthropic in 2024, that helps AI agents:

Access information dynamically

Pull only the data needed for a task

Avoid loading giant chunks of old context

Run code efficiently

Connect to tools, APIs, and memory systems
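Under the hood, MCP messages use JSON-RPC 2.0, and a tool is invoked with a `tools/call` request that carries the tool's name and its arguments. The sketch below builds such a request by hand to show its shape. In practice an MCP SDK constructs these messages for you. The tool name `search_documents` and its arguments are hypothetical.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message string."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,          # lets the client match the response to this request
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and arguments, for illustration only.
request = make_tool_call(1, "search_documents", {"query": "Q3 sales report"})
print(request)
```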

Instead of loading full history or large documents, the agent retrieves only the parts it needs. In Anthropic’s example, this cut token usage from 150,000 to 2,000 tokens, a reduction of about 98.7%.
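The percentage follows directly from the two token counts. Here is a quick check:

```python
def token_reduction(before: int, after: int) -> float:
    """Return the percentage of tokens saved when going from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


print(f"{token_reduction(150_000, 2_000):.1f}%")  # prints 98.7%
```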

[Image: Anthropic MCP AI token bloat reduction. Caption: MCP reduces token usage, improving AI speed and cost efficiency.]

How Anthropic Reduced 150K Tokens to Just 2K


1. Smarter Context Filtering

MCP filters out unnecessary history, so agents no longer store every detail from previous tasks.
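One simple way to picture this kind of filtering is to keep only the history entries tagged with the current task, and only the most recent few of those. This is a hypothetical heuristic for illustration. The `HistoryEntry` type and the `max_entries` limit are assumptions, and MCP itself does not prescribe this rule.

```python
from dataclasses import dataclass


@dataclass
class HistoryEntry:
    task: str  # which task this entry belongs to (hypothetical tagging scheme)
    text: str


def filter_history(history: list[HistoryEntry], current_task: str,
                   max_entries: int = 3) -> list[HistoryEntry]:
    """Keep only the most recent entries that belong to the current task."""
    if max_entries <= 0:
        return []
    relevant = [entry for entry in history if entry.task == current_task]
    # History is assumed to be in chronological order, so the last entries are newest.
    return relevant[-max_entries:]


history = [
    HistoryEntry("billing", "Customer asked about an invoice"),
    HistoryEntry("shipping", "Package delayed in transit"),
    HistoryEntry("billing", "Refund approved"),
]
print([entry.text for entry in filter_history(history, "billing")])
```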

2. On-Demand Data Pulling

Relevant data is fetched only when it is required, instead of loading full documents up front.
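Here is a minimal sketch of on-demand pulling. It assumes a hypothetical `OnDemandStore` that registers data sources cheaply and loads each one only when a task first asks for it:

```python
from typing import Callable


class OnDemandStore:
    """Hypothetical store that loads each data source only when first requested."""

    def __init__(self) -> None:
        self._loaders: dict[str, Callable[[], str]] = {}
        self._cache: dict[str, str] = {}
        self.fetch_count = 0

    def register(self, name: str, loader: Callable[[], str]) -> None:
        # Registering is cheap: the loader is stored, nothing is fetched yet.
        self._loaders[name] = loader

    def get(self, name: str) -> str:
        # Fetch on first use, then serve later requests from the cache.
        if name not in self._cache:
            self._cache[name] = self._loaders[name]()
            self.fetch_count += 1
        return self._cache[name]


store = OnDemandStore()
store.register("pricing", lambda: "pricing table (placeholder)")
store.register("handbook", lambda: "handbook text " * 10_000)  # large source
print(store.get("pricing"))
print(store.fetch_count)  # prints 1: the handbook was never loaded
```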

3. Code Execution Instead of Raw Instructions

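Instead of spelling out every step in a long prompt, the agent writes code that calls tools and processes their results in an execution environment. Large intermediate results stay out of the model’s context, which keeps prompts small.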
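The example below is hypothetical. Agent-written code filters a 10,000-row tool result and hands the model only a one-line summary. The function `fetch_rows` stands in for a real tool call and is an assumption.

```python
def fetch_rows() -> list[dict]:
    """Hypothetical stand-in for a tool call that returns a large result."""
    return [{"id": i, "status": "pending" if i % 100 == 0 else "done"}
            for i in range(10_000)]


def summarize_pending(rows: list[dict]) -> str:
    # This filtering runs in the execution environment, not in the model's context.
    pending = [row["id"] for row in rows if row["status"] == "pending"]
    return f"{len(pending)} pending of {len(rows)}; first ids: {pending[:5]}"


# Only this short summary string would be passed back to the model.
print(summarize_pending(fetch_rows()))
```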

4. Modular Memory Access

Memory blocks are retrieved only when needed, not all at once.
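Here is a minimal sketch of modular memory. It assumes a hypothetical `ModularMemory` class that stores each block under its own key, lets the agent list keys without loading contents, and returns only the blocks requested:

```python
class ModularMemory:
    """Hypothetical memory split into independently readable blocks."""

    def __init__(self) -> None:
        self._blocks: dict[str, str] = {}

    def write(self, key: str, content: str) -> None:
        self._blocks[key] = content

    def list_keys(self) -> list[str]:
        # The agent can see which blocks exist without loading their contents.
        return sorted(self._blocks)

    def read(self, *keys: str) -> dict[str, str]:
        # Return only the requested blocks; unknown keys are skipped.
        return {key: self._blocks[key] for key in keys if key in self._blocks}


memory = ModularMemory()
memory.write("user_preferences", "Prefers short email summaries")
memory.write("project_notes", "Migration planned for next sprint")
memory.write("old_logs", "debug output " * 5_000)
print(memory.list_keys())
print(memory.read("user_preferences"))
```

Only the small `user_preferences` block reaches the model here. The large `old_logs` block stays in storage until a task explicitly asks for it.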

5. Cleaner, Structured Communication

Tasks are organized into small messages instead of long dumps.
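As an illustration, one workflow step might pass a small structured message that points to where the full result lives rather than including the result itself. The `TaskMessage` fields and the file path below are hypothetical.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class TaskMessage:
    """One small, structured unit of work passed between agent steps."""
    step: str
    status: str
    result_ref: str  # pointer to where the full result is stored, not the result itself


msg = TaskMessage(step="extract_invoices", status="done",
                  result_ref="results/invoices.json")
payload = json.dumps(asdict(msg))
print(payload)
```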

[Image: Graph showing token bloat reduction in AI agents with MCP. Caption: MCP reduces AI token usage from 150K to 2K tokens.]

Why This Breakthrough Matters

1. Huge Cost Savings

Fewer tokens = lower API usage = reduced costs.
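The rough calculation below shows how token counts translate into cost. The price per million tokens is a hypothetical placeholder, not a quoted rate, so substitute your provider's actual pricing:

```python
def cost_usd(tokens: int, price_per_million: float) -> float:
    """Cost of `tokens` input tokens at a given price per million tokens."""
    return tokens / 1_000_000 * price_per_million


PRICE = 3.00  # hypothetical price per million input tokens, for illustration only
before = cost_usd(150_000, PRICE)
after = cost_usd(2_000, PRICE)
print(f"${before:.3f} -> ${after:.3f} per run")
```

The per-run saving looks small, but it scales linearly with the number of runs, which is what matters for agents running all day.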

2. Increased Speed

Smaller prompts = faster responses.

3. More Accurate Responses

Less unnecessary context can reduce errors and hallucinations.

4. Better for Large Projects

AI assistants, customer support bots, and automation tools can run efficiently.

5. Scalable for Enterprise AI

Organizations can operate hundreds of agents without huge compute bills.

What This Means for the Future of AI

Anthropic’s MCP sets a new efficiency standard. Future AI agents are likely to:

Pull data intelligently

Use structured tools

Stay lightweight and cost-effective

Handle complex workflows with minimal tokens

Learn more on Anthropic’s official blog.

Conclusion

Anthropic’s MCP, paired with code execution, drastically reduces AI token bloat, making agents faster, cheaper, and smarter. As more developers adopt MCP, AI becomes more practical and scalable for everyday business applications.