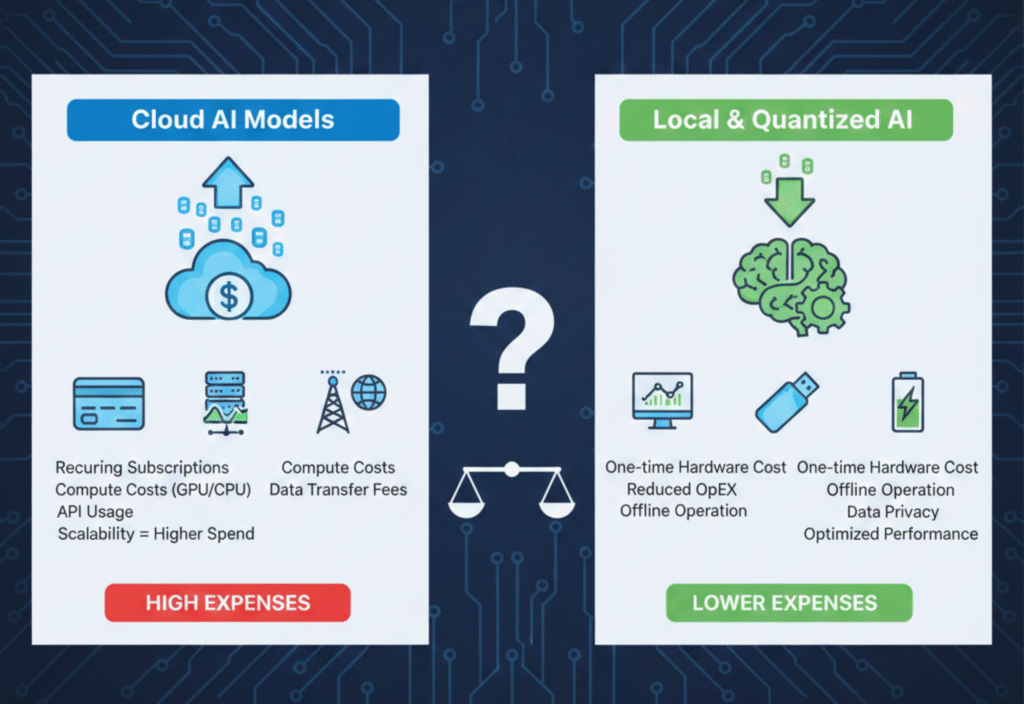

Comparing AI costs has become critical as businesses increasingly rely on artificial intelligence for automation, analytics, and decision-making. AI promises efficiency and innovation, but the real cost depends heavily on how models are deployed: through cloud-based services, on local infrastructure, or as quantized alternatives. Understanding these cost differences early helps teams avoid unexpected expenses and build more sustainable AI systems.

Understanding AI Cost Beyond Just Price

When people think about AI costs, they usually focus on API pricing or GPU hardware. In reality, AI expenses include multiple hidden factors:

Infrastructure and compute

Scalability and latency

Maintenance and updates

Data privacy and compliance

Energy consumption

Long-term operational costs

Each deployment approach handles these costs very differently.

Cloud AI Models: Convenience at a Premium

Cloud-based AI models (such as API-hosted LLMs or vision models) are the most popular choice today—and for good reason.

Advantages of Cloud AI Models

Minimal setup: No hardware to buy and little ML expertise needed

Instant scalability: Handle spikes in usage easily

Continuous improvements: Providers update models automatically

High accuracy: Access to state-of-the-art models

The Real Cost of Cloud AI

Despite the convenience, cloud models come with ongoing and often underestimated expenses:

Pay-per-token or per-request pricing that adds up quickly at scale

High inference costs for real-time or high-volume applications

Vendor lock-in, making migration difficult later

Data privacy concerns, especially for sensitive or regulated data

Latency issues due to network dependency

Best for: Prototypes, early-stage startups, low-volume usage, and teams without ML infrastructure.
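To see how pay-per-token pricing grows with volume, here is a minimal sketch of a monthly cost estimate. The function name, the token counts, and the prices ($1.00 and $3.00 per million tokens) are illustrative assumptions, not any provider's actual rates.

```python
def monthly_api_cost(requests_per_day, input_tokens, output_tokens,
                     price_in_per_million, price_out_per_million, days=30):
    """Estimate monthly spend for a pay-per-token API (all inputs are assumptions)."""
    # Cost of a single request: tokens times the per-million-token price.
    per_request = (input_tokens * price_in_per_million
                   + output_tokens * price_out_per_million) / 1_000_000
    return requests_per_day * days * per_request


# Hypothetical workload: 800 input and 300 output tokens per request.
for volume in (1_000, 100_000):
    cost = monthly_api_cost(volume, 800, 300, 1.00, 3.00)
    print(f"{volume:>7} requests/day -> ${cost:,.2f}/month")
```

With these made-up numbers, the bill scales linearly from about $51 a month at 1,000 requests per day to about $5,100 a month at 100,000 requests per day, which is why the same API can be cheap for a prototype and expensive in production.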

Local AI Models: Control with Responsibility

Local models run on your own servers, GPUs, or even high-end consumer hardware. This approach is gaining popularity as open-source AI models improve rapidly.

Advantages of Local AI Models

No per-request fees once infrastructure is in place (the marginal cost is mostly electricity)

Full control over data and privacy

Lower latency for on-device or internal systems

Freedom to customize and fine-tune models

Hidden Costs of Local AI

While local deployment avoids API fees, it introduces new expenses:

Upfront hardware investment (GPUs, servers, cooling)

Ongoing electricity and maintenance costs

Engineering expertise required for deployment and optimization

Scaling limitations during traffic spikes

Manual model updates and monitoring

Best for: Companies with steady workloads, privacy-sensitive data, and in-house technical expertise.
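The local cost items above can be folded into a rough monthly figure and compared with a per-request cloud price to find a break-even volume. This is a sketch under stated assumptions: the function names, hardware price, power draw, electricity rate, and staffing cost are all hypothetical placeholders to replace with your own numbers.

```python
def local_monthly_cost(hardware_cost, lifetime_months, power_watts,
                       price_per_kwh, staff_cost_per_month, hours_per_day=24):
    """Rough monthly cost of self-hosting: amortized hardware + energy + staff."""
    amortized = hardware_cost / lifetime_months
    energy = power_watts / 1000 * hours_per_day * 30 * price_per_kwh
    return amortized + energy + staff_cost_per_month


def break_even_requests_per_month(local_cost, cloud_cost_per_request):
    """Monthly request volume at which local and cloud spend are equal."""
    return local_cost / cloud_cost_per_request


# Hypothetical inputs: $12,000 server over 36 months, 600 W, $0.15/kWh,
# $1,500/month of engineering time, and $0.0017 per cloud request.
local = local_monthly_cost(12_000, 36, 600, 0.15, 1_500)
volume = break_even_requests_per_month(local, 0.0017)
print(f"local: ${local:,.2f}/month, break-even at {volume:,.0f} requests/month")
```

Below the break-even volume the cloud API is cheaper; above it, the fixed local costs are spread over enough requests to win. The estimate ignores scaling limits during traffic spikes, so it fits steady workloads best.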

Quantized Models: The Cost-Efficiency Sweet Spot

Quantization reduces the numerical precision of a model's weights (for example, from 16-bit to 8-bit, 4-bit, or even 2-bit), substantially lowering memory and compute requirements while preserving most of the model's quality.
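As an illustration of the idea, the sketch below applies simple symmetric 8-bit quantization to a handful of weights and measures the rounding error. It is a toy example in plain Python with hypothetical function names; production libraries use more elaborate schemes (per-channel or per-group scales, calibration data), so treat this as a sketch of the principle only.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in quantized]


weights = [0.82, -0.41, 0.05, -1.27, 0.33]  # made-up example values
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, scale, max_error)
```

Each weight is stored as a small integer plus one shared scale, and the reconstruction error per weight is bounded by half a quantization step. Fewer bits mean larger steps, which is where the accuracy trade-off comes from.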

Why Quantized Models Are Game-Changers

Roughly 50–75% lower weight memory at 8-bit or 4-bit compared with 16-bit

Faster inference on CPUs and smaller GPUs

Lower energy consumption

Enables AI on edge devices and low-cost hardware

Significantly reduced infrastructure costs
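The memory savings follow from simple arithmetic: weight memory is roughly the parameter count times the bits per weight, divided by 8 to get bytes. The sketch below runs that calculation for a hypothetical 7-billion-parameter model; the function name is an assumption, and the figures cover weights only, not activations, caches, or the small overhead of stored scale factors.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical 7-billion-parameter model at several precisions.
for bits in (16, 8, 4, 2):
    gb = weight_memory_gb(7e9, bits)
    saving = 1 - bits / 16
    print(f"{bits:>2}-bit: {gb:5.2f} GB ({saving:.1%} smaller than 16-bit)")
```

Under these assumptions the weights shrink from 14 GB at 16-bit to 3.5 GB at 4-bit, which is the difference between needing a data-center GPU and fitting on a consumer card.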

Trade-Offs of Quantized Models

Some accuracy degradation (often negligible at 8-bit, more noticeable at very low bit widths)

More testing required to find optimal precision

Not all models support extreme quantization well

Despite these challenges, modern quantization techniques have made this approach surprisingly robust and production-ready.

Best for: Cost-sensitive applications, edge AI, startups scaling up, and teams replacing expensive cloud inference.

Cost Comparison Overview

| Factor | Cloud Models | Local Models | Quantized Models |
|---|---|---|---|
| Upfront Cost | Low | High | Low–Medium |
| Ongoing Cost | High | Medium | Very Low |
| Scalability | Excellent | Limited | Moderate |
| Data Privacy | Low | High | High |
| Performance per Dollar | Medium | High | Very High |
| Vendor Lock-In | High | None | None |

Which Option Is Right for You?

There is no universal “best” choice—only the best fit for your use case.

Choose cloud models if speed, simplicity, and cutting-edge performance matter most.

Choose local models if you need control, privacy, and predictable workloads.

Choose quantized models if you want maximum efficiency, lower costs, and scalable AI without massive infrastructure.

Many teams now use a hybrid approach, sending complex tasks to cloud models and handling routine inference with quantized local models.
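A hybrid setup needs some rule for deciding where each request goes. The sketch below uses prompt length and a few keywords as a crude stand-in for task complexity. The `route` function, the keyword list, the threshold, and the backend labels are all hypothetical; a real system would more likely use a classifier or confidence scores.

```python
REASONING_KEYWORDS = ("analyze", "plan", "prove")  # assumed markers of complex tasks


def route(prompt, complexity_threshold=200):
    """Pick a backend label using prompt length and keywords as a complexity proxy."""
    text = prompt.lower()
    needs_reasoning = any(keyword in text for keyword in REASONING_KEYWORDS)
    if needs_reasoning or len(text.split()) > complexity_threshold:
        return "cloud"            # complex task: pay for the larger hosted model
    return "local-quantized"      # routine task: cheap local inference


print(route("Classify this ticket: printer is offline"))
print(route("Analyze the contract and plan a migration"))
```

The first prompt stays on the local quantized model and the second goes to the cloud, so per-token fees are only paid for requests that are likely to need the stronger model.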

Final Thoughts: The Future Is Cost-Aware AI

As AI adoption grows, cost efficiency will become just as important as model accuracy. Quantized and local models are no longer “inferior alternatives”—they are strategic tools for building sustainable AI systems.